TANGO BLOGPOST

In an era where machine learning (ML) models increasingly influence critical decisions, ensuring their trustworthiness and explainability is essential. From autonomous driving to real-time medical diagnosis and high-frequency trading, decisions often need to be made in seconds or even milliseconds. Yet the complexity of ML models, especially deep learning systems, renders their internal workings opaque. The Prefetched Offline Explanation Model (POEM) addresses these challenges, providing timely, interpretable insights while maintaining accuracy and reliability.

The Challenge of Explainable AI in Real-Time Applications

The adoption of ML models in sensitive domains introduces ethical and technical hurdles. Trustworthy AI demands transparency, particularly in scenarios where decisions directly impact human lives or financial systems. Traditional explainability methods such as LIME and SHAP are effective, but they often fail to meet the stringent time requirements of real-time applications. Moreover, generating high-quality explanations typically involves substantial computational overhead, which makes these methods impractical for dynamic, interactive environments.

Introducing POEM

POEM, developed through collaboration between ISTI-CNR and the University of Pisa, is a model-agnostic framework designed to generate local explanations for image classification tasks. Unlike its predecessor ABELE, POEM is tailored for time-sensitive scenarios, leveraging an innovative offline and online explanation pipeline. This dual-phase approach significantly accelerates the process of generating insights while ensuring the quality of explanations remains uncompromised.

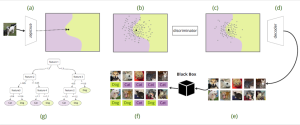

Figure 1: ABELE workflow, starting from the mapping of the instance x to the extraction of the transparent model: (a) instance x encoded within the latent space; (b) neighbourhood generation around x; (c) discriminator filtering the neighbourhood; (d) decoder transforming the points into images (e) annotated by the black box; (f) supervised data forming a local training set to (g) learn a decision tree.

In the offline phase, POEM constructs an explanation base by precomputing detailed models for representative points within the data distribution. Each model captures factual and counterfactual rules, enabling the generation of exemplars, counter exemplars, and saliency maps. These components provide users with intuitive visual and logical explanations of model decisions.

During the online phase, POEM retrieves precomputed explanations for new instances by matching them with the most similar points in the explanation base. This retrieval-based mechanism drastically reduces the time required to generate explanations, making it ideal for real-time applications. If a close match is unavailable, POEM defaults to the ABELE pipeline, ensuring robust fallback support.
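The retrieve-or-fall-back logic of the online phase can be sketched as follows. This is a minimal illustration, not POEM's actual implementation: the `StoredExplanation` record, the Euclidean distance in latent space, the same-class filter, and the `max_distance` threshold are all assumptions made for the example, and `slow_explainer` is a hypothetical stand-in for a full ABELE run.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass
class StoredExplanation:
    """Hypothetical record kept in the explanation base."""
    latent: Tuple[float, ...]  # latent coordinates of a representative point
    label: int                 # class the black box assigned to that point
    rule: str                  # placeholder for the precomputed explanation


def explain(
    z: Sequence[float],
    label: int,
    base: Sequence[StoredExplanation],
    max_distance: float,
    fallback: Callable[[Sequence[float], int], StoredExplanation],
) -> Tuple[StoredExplanation, bool]:
    """Return (explanation, hit); hit is True when the base was used."""
    # Assumption: only stored points with the same predicted class qualify.
    candidates = [e for e in base if e.label == label]
    best = min(candidates, key=lambda e: math.dist(e.latent, z), default=None)
    if best is not None and math.dist(best.latent, z) <= max_distance:
        return best, True              # fast path: reuse precomputed explanation
    return fallback(z, label), False   # slow path: compute from scratch


# Toy explanation base with two precomputed entries.
base = [
    StoredExplanation((0.0, 0.0), 3, "rule for class 3"),
    StoredExplanation((5.0, 5.0), 8, "rule for class 8"),
]


def slow_explainer(z, label):
    # Stand-in for the expensive pipeline that runs when no match is close enough.
    return StoredExplanation(tuple(z), label, "computed online")


near, near_hit = explain((0.1, 0.2), 3, base, 1.0, slow_explainer)
far, far_hit = explain((2.5, 2.5), 3, base, 1.0, slow_explainer)
```

In this toy setup the first query lands close to a stored point and reuses its explanation, while the second is too far from any stored point of the same class and triggers the fallback.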

Key Innovations and Methodology

POEM builds upon adversarial autoencoders (AAEs) to map input images into a latent space, facilitating efficient neighbourhood generation and decision-tree-based explanations. The latent space, structured to reflect the underlying data distribution, enables the creation of synthetic instances that serve as exemplars or counter exemplars. These instances illustrate the boundaries of model decisions, offering users actionable insights.
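To make the idea concrete, the sketch below perturbs a latent point, labels the neighbours with a classifier, and fits a one-split decision tree as the local surrogate. It is a deliberately simplified illustration: `black_box` is a hypothetical toy function standing in for the decoder plus the real classifier, Gaussian perturbation is an assumed sampling scheme, the discriminator filter is omitted, and a single split replaces the full decision tree.

```python
import random


def black_box(z):
    # Hypothetical stand-in for "decode z, then classify the image".
    return 1 if z[0] + z[1] > 1.0 else 0


def sample_neighbourhood(z, n, scale, rng):
    """Draw n latent points around z with Gaussian noise (assumed scheme)."""
    return [tuple(c + rng.gauss(0.0, scale) for c in z) for _ in range(n)]


def fit_stump(points, labels):
    """Exhaustively search the single split with the best training accuracy.

    Returns (feature, threshold, label_if_below, accuracy) for binary labels.
    """
    best = None
    for feature in range(len(points[0])):
        for threshold in sorted({p[feature] for p in points}):
            for below in (0, 1):
                above = 1 - below
                correct = sum(
                    (below if p[feature] <= threshold else above) == y
                    for p, y in zip(points, labels)
                )
                if best is None or correct > best[3]:
                    best = (feature, threshold, below, correct)
    feature, threshold, below, correct = best
    return feature, threshold, below, correct / len(points)


rng = random.Random(0)                    # fixed seed for reproducibility
z = (0.5, 0.5)                            # latent code of the instance
neighbours = sample_neighbourhood(z, 200, 0.3, rng)
labels = [black_box(p) for p in neighbours]
feature, threshold, below, accuracy = fit_stump(neighbours, labels)

# The branch that z falls into is read as the factual rule;
# the opposite branch gives the counterfactual rule.
side = "<=" if z[feature] <= threshold else ">"
other = ">" if side == "<=" else "<="
factual = f"z[{feature}] {side} {threshold:.2f}"
counterfactual = f"z[{feature}] {other} {threshold:.2f}"
```

Neighbours that satisfy the factual rule are candidates for exemplars, and those that satisfy the counterfactual rule are candidates for counter exemplars, once decoded back into images.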

The offline phase absorbs the computationally intensive parts of explanation generation, leaving the online phase with a fast retrieval step. Furthermore, POEM introduces enhanced algorithms for selecting exemplars and counter exemplars, prioritizing interpretability and user relevance. Saliency maps, generated through refined exemplar selection, highlight the critical features influencing model predictions, aiding in transparency and trust.

Experimental Validation: Speed and Quality Combined

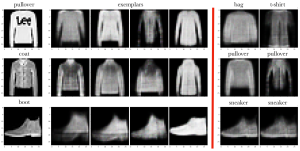

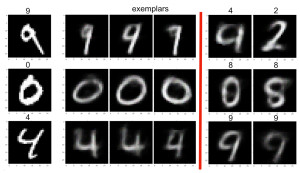

Extensive experiments on benchmark datasets, including MNIST, FASHION, and EMNIST, show that POEM is substantially faster than ABELE. POEM achieves execution time reductions of up to 96.5% compared to ABELE, depending on the dataset and classifier. For instance, generating an explanation for MNIST using a Random Forest classifier takes on average just 17 seconds with POEM, compared to over 300 seconds with ABELE. This efficiency stems from the high hit rate in the explanation base: most instances retrieve a precomputed model instead of triggering the full pipeline.
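As a quick check of the figures quoted above, the snippet below computes the relative reduction implied by 17 seconds versus 300 seconds, and shows how the average cost depends on the hit rate. The function names are illustrative, and the hit rate and per-path timings in the second call are made-up values chosen only to show the formula; they are not measurements from the experiments.

```python
def relative_reduction(baseline_s, new_s):
    """Fraction of time saved relative to the baseline."""
    return 1.0 - new_s / baseline_s


def expected_time(hit_rate, t_hit_s, t_miss_s):
    """Average time per explanation when a fraction hit_rate is served from the base."""
    return hit_rate * t_hit_s + (1.0 - hit_rate) * t_miss_s


# Figures quoted in the text: 17 s with POEM, 300 s (a lower bound) with ABELE.
mnist_rf_reduction = relative_reduction(300.0, 17.0)
print(f"{mnist_rf_reduction:.1%}")  # prints 94.3%

# Hypothetical values, for illustration only (not experimental results).
illustrative_avg = expected_time(hit_rate=0.9, t_hit_s=2.0, t_miss_s=300.0)
```

Because ABELE takes more than 300 seconds in this setting, 94.3% is a lower bound on the reduction for this example, which is consistent with the reported maximum of 96.5%. The second function makes the role of the hit rate explicit: every miss pays the full fallback cost, so the average is dominated by misses unless they are rare.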

In addition to speed, POEM excels in explanation quality. Its exemplars and counter exemplars provide visually coherent and semantically meaningful insights into model behaviour. Saliency maps generated by POEM consistently highlight the most relevant features for classification, as validated through deletion experiments.
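A deletion experiment of this kind can be sketched as follows: pixels are removed in decreasing order of saliency and the classifier's score is recorded after each removal, so a steep early drop suggests that the map ranks the important pixels first. The code is an assumed, simplified protocol rather than the exact one used in the experiments, and `toy_score` is a hypothetical linear scorer standing in for the black box.

```python
def deletion_curve(image, saliency, score_fn, baseline=0):
    """Scores after deleting pixels one by one, most salient first."""
    order = sorted(range(len(image)), key=lambda i: saliency[i], reverse=True)
    current = list(image)
    scores = [score_fn(current)]
    for i in order:
        current[i] = baseline          # "delete" the pixel
        scores.append(score_fn(current))
    return scores


weights = [5, 3, 2, 0]                 # toy model: weighted sum of pixels


def toy_score(pixels):
    return sum(w * p for w, p in zip(weights, pixels))


image = [1, 1, 1, 1]                   # flattened 2x2 image
good_map = [5, 3, 2, 0]                # saliency that matches the model
bad_map = [0, 2, 3, 5]                 # saliency that ranks pixels backwards

good_curve = deletion_curve(image, good_map, toy_score)  # [10, 5, 2, 0, 0]
bad_curve = deletion_curve(image, bad_map, toy_score)    # [10, 10, 8, 5, 0]
```

The well-aligned map makes the score collapse after the first deletions, while the reversed map leaves it high until the end. Summing each curve gives a simple area-under-the-curve score, where lower is better.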

Figure 2: Three examples of explanations for instances of the MNIST (left) and FASHION (right) datasets. On the left, the image to be classified, with the class assigned by the black box on top. In the middle, a set of exemplars. On the right, two counter exemplars for each instance.

Transforming Explainable AI for the Future

POEM’s contribution extends beyond efficiency. By decoupling the computationally intensive steps from real-time operations, it paves the way for scalable, explainable AI systems in diverse domains. Its modular design supports adaptation to other data types, such as time series and tabular data, making it a versatile tool for future applications.

As the demand for transparent and trustworthy AI grows, POEM represents a significant step toward building systems that inspire trust without compromising performance. By enabling quick, actionable explanations, it ensures that AI remains a valuable ally in high-stakes decision-making environments.

Written by: Carlo Metta, Anna Monreale, Lorenzo Mannocci, Salvatore Rinzivillo, University of Pisa (UNIPI)