Ethical AI in Surgery: Policy Lessons from the ALTAI Framework Implementation

The integration of artificial intelligence into surgical decision-making represents a critical juncture for healthcare policy. A study conducted during the first 18 months of the Tango project, examining AI-assisted surgery for abdominal aortic aneurysms (AAA), offers valuable insights into how systematic assessment frameworks can help ensure ethical AI deployment.

Surgical decision-making encompasses complex choices across preoperative planning, intraoperative conduct, and postoperative management (Latifi 2016). Current clinical decision-support systems struggle with labor-intensive data entry and variable accuracy (Balch et al. 2024), creating an urgent need for more sophisticated solutions. Yet the question facing policymakers is not simply whether to integrate AI, but how to ensure that its deployment upholds fundamental healthcare principles.

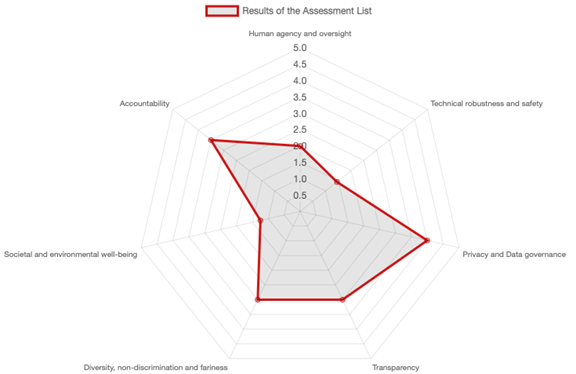

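The Assessment List for Trustworthy Artificial Intelligence (ALTAI), developed by the EU’s High-Level Expert Group on AI, provides a comprehensive framework for evaluating AI systems against seven requirements: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability. What makes the AAA surgery case study particularly illuminating is that it demonstrates both the promise and the challenges of applying this framework in practice.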

The ALTAI evaluation revealed a nuanced picture of AI readiness in surgical settings. Human agency and oversight achieved a perfect score of 5.0, indicating that the system keeps the surgeon in control. This is a critical finding, given widespread concerns about AI replacing clinical judgment. Technical robustness scored 4.5, suggesting reliability suitable for high-stakes medical environments, while privacy and data governance reached 4.0, indicating GDPR compliance with room for improvement.

However, the lower scores tell an equally important story. Transparency scored only 3.0, indicating that the “black box” nature of AI decision-making remains a problem for clinical accountability, even though explainability is essential for building trust in healthcare settings (Jeyaraman et al. 2023). The diversity and fairness score of 3.5 raises concerns that the system could perpetuate existing biases, while the environmental well-being score of just 2.5 suggests that sustainability remains a substantial challenge in medical AI development.

These findings have profound policy implications. The transparency gap indicates that regulatory frameworks must mandate minimum explainability standards for AI systems in clinical settings. Healthcare professionals need to understand and, when necessary, challenge AI recommendations. This isn’t merely a technical requirement but a fundamental aspect of maintaining professional accountability and patient trust.

The moderate fairness score highlights another critical policy area. Sahiner et al. (2023) warn about data drift in medical machine learning: when the data a deployed system encounters differ from the data it was trained on, its performance can degrade, so a system trained on specific populations may perform poorly on others. This points to the need for continuous monitoring mandates and regular bias audits to ensure that AI systems serve all patient populations equitably.
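The monitoring and audit mandates described above can be made concrete with two simple checks. The sketch below is illustrative only and assumes per-patient inputs and predictions are available. It uses the population stability index (PSI), one widely used drift statistic chosen here for illustration rather than taken from the study or from Sahiner et al., to compare a feature's distribution at training time with its distribution in current use, and it compares model accuracy across patient subgroups. The function names, the bin count, the 0.25 alert level (a common rule of thumb, not a regulatory threshold) and all sample data are hypothetical.

```python
import math
from collections import defaultdict


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI of one numeric feature: reference (e.g. training) vs. current (deployment) sample.

    Uses equal-width bins over the reference range; values outside that range
    are clipped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for a constant feature

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps keeps empty bins from causing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def subgroup_accuracy(y_true, y_pred, groups):
    """Accuracy per patient subgroup, plus the largest gap between any two subgroups."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    acc = {g: correct[g] / total[g] for g in total}
    return acc, max(acc.values()) - min(acc.values())


# Hypothetical data for illustration only; not from the Tango study.
training_ages = [55, 60, 62, 65, 68, 70, 72, 75, 78, 80]
deployment_ages = [40, 42, 45, 48, 50, 52, 55, 58, 60, 62]
psi = population_stability_index(training_ages, deployment_ages, n_bins=5)
print("drift review needed" if psi > 0.25 else "no major drift")

per_group, gap = subgroup_accuracy(
    y_true=[1, 0, 1, 1, 0, 1],
    y_pred=[1, 0, 1, 0, 1, 1],
    groups=["site A", "site A", "site A", "site B", "site B", "site B"],
)
print(per_group, f"max gap = {gap:.2f}")
```

PSI only signals that the input distribution has moved; whether performance has actually degraded still requires outcome data, which is why the sketch pairs it with a subgroup accuracy audit.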

The study’s emphasis on “virtual bargaining” between AI and human decision-makers, a concept drawn from contractualist accounts of moral judgment (Levine et al. 2024), introduces novel challenges for medical liability frameworks. When an AI system and a surgeon collaborate on a decision, who bears responsibility if something goes wrong? Current liability frameworks weren’t designed for this level of human-machine integration, creating a policy vacuum that needs urgent attention.

Importantly, the research suggests that ALTAI assessment should not be a one-time certification process. The framework needs to be integrated throughout the AI lifecycle, from initial design through deployment and continuous monitoring (Bogucki 2025). This mirrors pharmaceutical regulation, where drugs undergo initial approval but remain subject to post-market surveillance.

The AAA surgery case provides a concrete example of how this might work in practice. The AI system assists across all surgical phases: analyzing patient anatomy for procedural planning, providing real-time guidance during surgery, and predicting postoperative complications. Each phase presents different ethical challenges and requires tailored oversight mechanisms.

Healthcare institutions implementing AI systems need clear governance structures. The study’s establishment of an advisory group including ethicists and legal experts points toward a model where AI governance committees become standard in hospitals, similar to existing institutional review boards. These committees would oversee not just initial implementation but ongoing performance and ethical compliance.

The variation in ALTAI scores across dimensions suggests that current regulatory frameworks are insufficient for governing medical AI. While some aspects, such as human oversight, are well addressed, others, such as transparency and fairness, lag behind. This points to the need for comprehensive, AI-specific healthcare regulations that establish minimum standards for each ALTAI dimension and create clear certification processes for different risk categories.
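As a rough illustration of how per-dimension minimum standards and risk-based certification could interact, the sketch below checks the scores reported for the AAA surgery system against per-category minimums. The threshold values, the two risk categories and the function name are hypothetical, invented for illustration. The dimension names follow the ALTAI requirement labels, and accountability is flagged as not assessed because no score for it is reported here.

```python
# ALTAI's seven requirements (the article's shorter labels are mapped onto these).
ALTAI_REQUIREMENTS = [
    "human agency and oversight",
    "technical robustness and safety",
    "privacy and data governance",
    "transparency",
    "diversity, non-discrimination and fairness",
    "societal and environmental well-being",
    "accountability",
]

# Scores reported for the AAA surgery system (5.0 is described as a perfect score).
# No accountability score is reported, so it is deliberately left out.
REPORTED_SCORES = {
    "human agency and oversight": 5.0,
    "technical robustness and safety": 4.5,
    "privacy and data governance": 4.0,
    "transparency": 3.0,
    "diversity, non-discrimination and fairness": 3.5,
    "societal and environmental well-being": 2.5,
}

# HYPOTHETICAL per-category minimums, invented for illustration only.
MINIMUM_SCORES = {"limited risk": 3.0, "high risk": 4.0}


def certification_gaps(scores, risk_category):
    """Return the requirements that are unassessed or below the category's minimum."""
    minimum = MINIMUM_SCORES[risk_category]
    gaps = {}
    for requirement in ALTAI_REQUIREMENTS:
        score = scores.get(requirement)
        if score is None:
            gaps[requirement] = "not assessed"
        elif score < minimum:
            gaps[requirement] = f"{score} < {minimum}"
    return gaps


for category in MINIMUM_SCORES:
    gaps = certification_gaps(REPORTED_SCORES, category)
    print(f"{category}: {gaps or 'meets all minimums'}")
```

Under these invented thresholds the same system shows fewer gaps as a limited-risk system than as a high-risk one, which is the intent of tiering certification by risk. Treating a missing score as a gap, rather than silently skipping it, reflects the argument that every dimension needs a minimum standard.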

Successful AI integration requires careful attention to situated human-AI decision-making (Wilson et al. 2024). The technology must fit within existing clinical workflows while enhancing rather than disrupting professional judgment. This balance is delicate and requires both technological sophistication and regulatory wisdom.

The path forward demands collaborative effort between policymakers, healthcare institutions, and technology developers. We need regulatory frameworks that promote innovation while safeguarding patient interests and maintaining the irreplaceable value of human clinical judgment. The ALTAI framework provides a robust starting point, but its implementation in the AAA surgery case reveals just how much work remains to be done. The future of surgical AI depends not just on technological advancement but on our ability to create governance structures that ensure its ethical, transparent, and equitable deployment.

Written by: Artur Bogucki, Centre for European Policy Studies (CEPS)

Balch, J.A., et al. (2024). Integration of AI in surgical decision support: improving clinical judgment. Global Surg Educ 3, 56.

Bogucki, A. (2025). Applied Trustworthy Data and Artificial Intelligence Governance in Precision Agriculture. In: Engineering and Value Change. Springer Nature Switzerland, Cham, pp. 101–124.

Jeyaraman, M., et al. (2023). Unraveling the Ethical Enigma: Artificial Intelligence in Healthcare. Cureus 15(8), e43262.

Latifi, R. (2016). The Anatomy of the Surgeon’s Decision-Making. In: Surgical Decision Making. Springer, Cham.

Levine, S., et al. (2024). When rules are over-ruled: Virtual bargaining as a contractualist method of moral judgment. Cognition 250, 105790.

Sahiner, B., et al. (2023). Data drift in medical machine learning: implications and potential remedies. Br J Radiol 96(1150), 20220878.

Wilson, B., et al. (2024). Designing for Situated AI-Human Decision Making: Lessons Learned from a Primary Care Deployment. Proceedings of the 1st International Workshop on Designing and Building Hybrid Human–AI Systems.